Dive into the latest from the cybersecurity world and the AI chatbot ChatGPT as we share ten ways hackers use ChatGPT for hacking and other malicious purposes. Let’s get into it.

ChatGPT transformed the digital world and content creation within a matter of days, making many people’s jobs easier around the globe. But every coin has two sides, and ChatGPT has a darker one that cybercriminals and threat actors have been exploiting for some time now. Here are the top 10 ways threat actors are using ChatGPT for hacking.

10 Applications of ChatGPT that Hackers Are Already Exploiting

1. Writing Sophisticated Phishing Emails

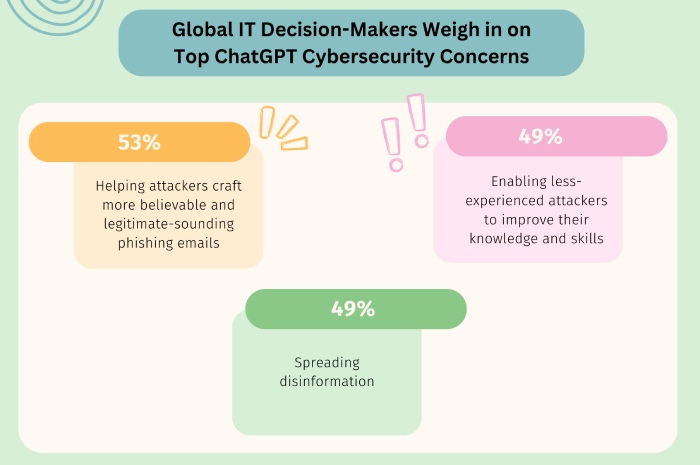

Gone are the days when phishing emails could be spotted by their grammatical errors and unconvincing wording. Threat actors have taken quite a liking to ChatGPT and are using the AI bot to write sophisticated phishing emails.

These ChatGPT-generated phishing emails are persuasive and carefully crafted with social engineering tactics to trick victims into clicking malicious links and losing money or data to cybercriminals.

2. Creating Malware to Steal Money

Despite all the good it does, ChatGPT is also being used by threat actors to create malicious code and malware. Researchers at Check Point came across multiple underground cybercriminal groups using the tool to develop malware.

Using Java-based malware and malicious encryption tools to facilitate fraud, threat actors have already built basic versions of tools that steal money and data from unsuspecting individuals. Given time, they are likely to refine these tools and carry out attacks at a much larger scale.

3. Evasion of Security Products

ChatGPT was trained on an extensive body of data and responds rapidly to queries, including requests to debug code. Its usefulness goes beyond debugging, though: you can hold a conversation with ChatGPT and get responses tailored to your specific problem.

Threat actors are exploiting these capabilities to create polymorphic malware, which repeatedly changes its code to avoid signature-based detection. Such malware can slip past even top-of-the-line security products that lack real-time threat intelligence, making it easier to break into a corporate network. If a problem does arise, ChatGPT is quick to suggest a workaround.

4. Social Engineering

Threat actors aren’t stopping there; they are also using the tool’s ability to mimic human language to carry out social engineering attacks.

Getting answers from the tool or generating academic papers for students is one thing. Asking ChatGPT to write social engineering emails, or feeding it a conversation and having it write the replies, helps threat actors run scams and defraud innocent individuals worldwide.

Even the most skilled cybersecurity experts find such attacks hard to prevent, because ChatGPT lets threat actors adapt their messages to an individual’s behavior and tone.

5. Creating Infostealers

Threat actors can recreate existing malware strains by feeding ChatGPT the techniques described in research publications and online articles and asking it to write the code.

Check Point’s researchers confirmed this when they came across a basic infostealer that searched a system for 12 common file types, copied the matching files, and uploaded them over the web to a server controlled by the attacker.

6. Creating Encryption Tools

Beyond general-purpose malware, threat actors also misuse ChatGPT to build malicious encryption tools.

Thanks to the AI-powered bot’s accuracy and versatility, threat actors have developed encryption tools that, combined with legitimate-looking phishing email campaigns, can be used to take over a system and encrypt all of its data, which is the basic premise of ransomware. Once the system is encrypted, the threat actor demands a ransom.

7. Creating Dark Web Marketplaces

Check Point highlighted in its research that threat actors have also used the platform to create dark web marketplaces for fraud. Such marketplaces give threat actors a platform to automate the trading of stolen accounts, payment cards, services, and goods. Even though these are the early days of ChatGPT, threat actors have spared no effort in misusing the tool, and this misuse is still gaining traction.

8. Practicing with Novel Social Engineering Attack Dialogues

ChatGPT is helping novice cybercriminals sharpen their social engineering tactics against unsuspecting individuals. By conversing with the chatbot and rehearsing attack dialogues (questions and responses), threat actors are learning what to expect in an interaction and preparing for attack campaigns, for example by practicing how to answer questions while impersonating customer support staff or third-party vendors.

9. Exploiting Vulnerabilities and Zero-days

Ask ChatGPT a question, and it will do its best to give you a useful answer. Cybercriminals know this all too well and are using the chatbot’s capabilities and broad knowledge to ask how to exploit particular network vulnerabilities and zero-days. With ChatGPT’s help, even low-skilled would-be cybercriminals may be able to get past sophisticated network defenses.

10. Brute-Force Attacks

Threat actors are also using the AI chatbot for more sophisticated brute-force attacks. By asking ChatGPT to generate likely password guesses, threat actors can save time and take over accounts for malicious activities far more efficiently. Threat actors have found many alarming ways to exploit the bot’s ability to understand and respond to natural language.

Final Words

The capabilities of artificial intelligence (AI) bots and the craze around chatbots drove a significant spike in ChatGPT’s popularity. And like every revolutionary technology, it has also been adopted by cybercriminals for malicious purposes. Only time will tell how cybersecurity researchers will evolve their tactics to counter threat actors using ChatGPT.