Cybersecurity in the age of AI: How innovation is empowering both defenders and attackers

The cyber threat landscape has always been complex and evolving, but the advent of AI has made it easier than ever for threat actors to bypass security filters. They are now penetrating deep into every sector and disrupting interconnected operations at multiple levels. AI-backed deception has reached the point where a staggering 87% of global organizations have faced an AI-powered cyberattack in the past year.

But why does this conversation matter now?

We are in 2025, a pivotal moment in the evolution of cybersecurity: standard trends and practices are slowly becoming obsolete or less relevant. AI’s promise and capabilities have accelerated its adoption in cybersecurity, but the truth is that threat actors are currently leveraging it better than defenders.

According to IBM’s 2024 Cost of a Data Breach report, 51% of organizations have integrated AI-backed tools and automated systems into their security defense strategy, a 33% increase since 2020. The same report highlights that companies that deployed AI-driven tools saw breach costs that were, on average, $1.76 million lower than those of companies still relying on pre-AI technology. These statistics suggest that AI can streamline detection, automate responses, and reduce damage.

However, here is the flip side: the same AI technology lets attackers mount chilling cyberattacks with sophisticated videos and messages that are devoid of any conventional red flags. The FBI has also issued public notices warning businesses and general users that criminals are using generative artificial intelligence to facilitate financial fraud.

We are now at a point where AI is not just a back-office utility but a frontline technology used by both defenders and attackers. This underscores the urgency of adopting modern cybersecurity measures to keep a business sustainable.

AI’s swift expansion in cybersecurity and beyond

AI is not a secret; it’s available for open access and has become a foundational layer across sectors. If we talk about cybersecurity, then AI is being deployed in intrusion detection systems (IDS), Security Information and Event Management (SIEM) platforms, endpoint protection tools, threat intelligence feeds, and even in user behavior analytics (UBA).

From a cybersecurity leader’s point of view, this expansion is not just logical, but necessary. As the cyber threats are now being driven by AI-backed engines, they are evolving in speed, complexity, and volume. And there is no doubt that traditional tools can’t keep up.

AI is bridging the gap by bringing unprecedented speed, scalability, and foresight to cybersecurity. In today’s security operations, many alerts are false alarms, but AI is changing that. AI doesn’t just sit back and wait; it is designed to learn from past attacks, which helps it pick up new patterns and respond in real time, often within milliseconds. This helps limit the damage considerably.

This kind of responsiveness is not humanly possible; hence, integrating AI into cybersecurity makes perfect sense.

Justifying the ‘double-edged sword’ metaphor for AI

Plenty of articles online call AI a double-edged sword. It’s an apt metaphor that accurately reflects AI’s dual role in the cybersecurity landscape. On one edge, it helps security personnel detect and contain threats at machine speed. On the other edge, adversaries exploit it to craft convincing and sophisticated phishing emails, deepfake videos, voice messages, deceptive graphics, and even malicious code for as little as USD 50!

All of this makes it easier for them to launch faster, precisely targeted attacks that stand a good chance of tricking victims into sharing sensitive details, wiring money, or downloading malware-infected files.

Take phishing, for example. Older detection tools usually look for certain keywords or odd email patterns to catch scams. But AI has changed the game. Now, phishing emails can be written flawlessly, personalized using scraped data from the internet, and made to sound just like real conversations—making them almost impossible to spot.

What’s even more worrying is that the same AI used to detect threats can also be used to beat security systems. In 2024, researchers at Black Hat showed how generative AI can create polymorphic malware—code that constantly changes itself to avoid getting caught by traditional antivirus tools.

Simply put, AI isn’t just making attacks stronger—it’s completely changing how the game is played.

How is AI transforming cybersecurity for good?

Let’s first look at how AI is strengthening cybersecurity:

AI-powered threat detection

There is no doubt that cyber threats are growing in both volume and speed, making it challenging for human teams and conventional technology to manage and ward them off. AI helps by focusing on patterns and behaviors to spot anomalies.

It learns what ‘normal’ looks like across your network—how users log in, what files they touch, how devices behave—and then spots anything that seems off. This shift from rules to behavior is a game-changer.

Take something like UEBA (User and Entity Behavior Analytics). AI quietly tracks patterns like when employees usually log in, what systems they use, and how they move through the network. So if someone suddenly logs in from a different country at 3 AM and starts downloading sensitive data, AI flags it immediately—even if there’s no known malware involved.
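To make the idea of a behavioral baseline concrete, here is a minimal sketch in Python. It is only an illustration, not how any particular UEBA product works: the `LoginEvent` type, the `build_baseline` and `is_anomalous` helpers, and the two-hour tolerance are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    user: str
    country: str  # country of the source IP (hypothetical field)
    hour: int     # local hour of day, 0-23


def build_baseline(history):
    """Record the countries and login hours seen for each user."""
    baseline = defaultdict(lambda: {"countries": set(), "hours": set()})
    for event in history:
        baseline[event.user]["countries"].add(event.country)
        baseline[event.user]["hours"].add(event.hour)
    return baseline


def is_anomalous(event, baseline, hour_tolerance=2):
    """Flag a login from a new country or far from the user's usual hours."""
    profile = baseline.get(event.user)
    if profile is None:
        return True  # no history at all: treat as suspicious
    new_country = event.country not in profile["countries"]
    # Circular distance, so 23:00 and 01:00 count as two hours apart.
    nearest = min(
        min(abs(event.hour - h), 24 - abs(event.hour - h))
        for h in profile["hours"]
    )
    return new_country or nearest > hour_tolerance


history = [LoginEvent("alice", "US", h) for h in (8, 9, 10, 17)]
baseline = build_baseline(history)
print(is_anomalous(LoginEvent("alice", "US", 9), baseline))  # usual pattern
print(is_anomalous(LoginEvent("alice", "RO", 3), baseline))  # new country at 3 AM
```

A real system would learn far richer features (devices, data volumes, peer-group behavior) and score them statistically rather than with fixed rules, but the principle is the same: compare each event with what is normal for that user.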

Another solid example is AI-powered endpoint detection. Let’s say ransomware starts locking files on someone’s laptop. Tools like CrowdStrike or SentinelOne don’t wait to match a virus signature. They spot unusual behavior, like rapid file encryption or strange registry changes, and can shut that device off from the rest of the network within seconds to stop the attack from spreading.
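One behavioral signal of this kind can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of how CrowdStrike or SentinelOne work internally: it treats a burst of high-entropy file writes as a hint that encryption is in progress. The function names and both thresholds are hypothetical.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Bits per byte: near 0 for repetitive data, near 8 for random or encrypted data."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def looks_like_mass_encryption(recent_writes, entropy_threshold=7.5, min_files=20):
    """recent_writes: byte strings written to disk within a short time window."""
    high_entropy = sum(
        1 for blob in recent_writes if shannon_entropy(blob) >= entropy_threshold
    )
    return high_entropy >= min_files


plain = [b"quarterly report draft " * 200 for _ in range(30)]
scrambled = [os.urandom(4096) for _ in range(30)]  # stands in for encrypted content
print(looks_like_mass_encryption(plain))
print(looks_like_mass_encryption(scrambled))
```

Entropy alone would misfire on compressed archives and media files, so a production tool would combine it with other signals such as write rate, file renames, and process reputation.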

Even phishing protection has evolved with AI. Gmail’s AI filters or Microsoft Defender now go beyond just scanning for dodgy links. They analyze how an email is written, the tone, the context, and whether it mimics your usual contacts, helping catch even polished, AI-generated scams.

For businesses, the payoff is huge. AI helps detect and respond to threats much faster. In fact, companies using AI spot breaches nearly a month sooner than those that don’t—and that can save millions in potential damages. It also cuts down on alert fatigue for security teams, letting them focus only on what really matters.

Automated incident response

One of the most significant shifts brought by AI is in how companies handle incident response. Earlier, they depended on manually investigating every single alert, triaging threats one by one, and piecing responses together into a definite answer. It was like walking through a maze of disconnected tools, hoping to reach a conclusion. AI has changed the picture completely. Today, incident response runs on fast and accurate tools that help the security team react in real time.

A typical ransomware attack can begin encrypting critical files within minutes of gaining initial access. With AI-powered tools, such attacks can be detected at an early stage, after which the tools automatically isolate affected endpoints, block suspicious IPs, or trigger pre-defined playbooks. All this can be done without human intervention.

Let’s take a phishing attack as an example. Imagine someone tries to impersonate your company’s CFO and sends an email asking for a quick wire transfer. If AI is in place, it can immediately spot the email as suspicious, block it from reaching the inbox, warn the user, and check if similar emails were sent to others. It can even update the system to prevent future emails like this from slipping through. What used to take hours to investigate and fix can now be done in just seconds.
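The CFO scenario above can be sketched as a tiny automated playbook. Everything here is hypothetical and simplified: the `Email` and `ResponseLog` types, the `EXECUTIVES` directory, and the keyword rule in `is_suspicious` merely stand in for the verdict a trained model would produce.

```python
from dataclasses import dataclass, field


@dataclass
class Email:
    sender: str
    display_name: str
    subject: str
    recipients: list


@dataclass
class ResponseLog:
    actions: list = field(default_factory=list)


# Hypothetical directory of executives and their legitimate addresses.
EXECUTIVES = {"Jane Doe": "jane.doe@example.com"}
URGENT_WORDS = ("wire transfer", "urgent", "immediately")


def is_suspicious(email: Email) -> bool:
    """Display name matches an executive but the address does not, plus urgency."""
    real_address = EXECUTIVES.get(email.display_name)
    impersonation = real_address is not None and email.sender.lower() != real_address
    urgent = any(word in email.subject.lower() for word in URGENT_WORDS)
    return impersonation and urgent


def run_playbook(email: Email, mailbox: list, blocklist: set) -> ResponseLog:
    log = ResponseLog()
    if not is_suspicious(email):
        return log
    log.actions.append(f"quarantined message from {email.sender}")
    log.actions.append(f"warned {', '.join(email.recipients)}")
    # Check whether the same sender reached anyone else in the organization.
    similar = [m for m in mailbox if m.sender == email.sender and m is not email]
    log.actions.append(f"found {len(similar)} similar message(s)")
    blocklist.add(email.sender)  # stop future mail from this sender
    log.actions.append(f"blocked {email.sender}")
    return log


fraud = Email("jane.doe@examp1e-pay.com", "Jane Doe",
              "Urgent wire transfer needed", ["bob@example.com"])
earlier = Email("jane.doe@examp1e-pay.com", "Jane Doe",
                "Quick favor", ["carol@example.com"])
blocklist = set()
log = run_playbook(fraud, [earlier, fraud], blocklist)
print("\n".join(log.actions))
```

The point of the sketch is the sequence (block, warn, search, update) running as one step rather than as four separate manual tasks.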

This kind of instant, automated response is a huge advantage, especially for companies that don’t have big security teams. AI systems can run 24/7, step in the moment something looks off, and only bring in human experts when it’s really needed. That means threats are stopped faster, and there’s far less risk of major damage. This is precisely why 75% of US and UK security practitioners adopted AI tools, recognizing their effectiveness in detecting previously undetectable threats.

And it’s not just about speed. AI makes responses more consistent. People can miss steps when they’re under pressure, but an automated playbook follows the same process every time, with no shortcuts and no skipped steps. It can even manage hundreds of issues at once, which would be impossible for any human team to handle alone.

Predictive threat intelligence

AI has made it possible for CISOs to be predictive and not just reactive. Predictive threat intelligence simply means anticipating cyberattacks before they happen. This helps limit the damage and strengthens your defensive posture.

AI helps here by analyzing vast amounts of threat data from across the globe, including malware signatures, IP addresses, dark web chatter, and attack vectors. It then connects the dots to form a clear picture of the threat. And AI does all this in near real time, which is beyond human capabilities.

For example, if an AI system detects that a new phishing kit is spreading rapidly in one region, it can alert companies in other regions with similar risk profiles to prepare in advance.

Spotting and warding off emerging threats early helps businesses strengthen their systems and patch vulnerabilities in a planned, coordinated way rather than in haste and panic.

AI in email security

Email is one of the most vulnerable modes of communication. Cybercriminals often try to gain unauthorized access to email accounts to intercept or modify content, send phishing emails on behalf of credible people, launch man-in-the-middle (MITM) attacks, or simply steal sensitive information. Business email compromise, spoofing, phishing, and CEO fraud are some of the top email-based cyber threats attempted across the world.

The situation has worsened so much that in 2024, about 64% of businesses were hit by Business Email Compromise (BEC) attacks, each costing them around $150,000 on average. These scams usually target employees who handle money by pretending to be company executives or trusted partners. Not just private companies but also government bodies are being impersonated under the pretext of claiming overdue taxes or fines. This fraud has increased by 35% in 2025 compared to last year.

In response to numbers like these, even Google, Yahoo, and Microsoft have stepped up to make DMARC a requirement for bulk senders. DMARC tells recipient servers how to handle emails that fail SPF and DKIM authentication and alignment: deliver them, quarantine them, or reject them. But on its own, it follows fixed rules. This is where AI comes in to add an extra layer of intelligence by digging deeper into things like email headers, SPF/DKIM alignment, sending behavior, and unusual patterns in real time.
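For readers who have not seen one, a DMARC policy is just a DNS TXT record made of `tag=value` pairs. The short Python sketch below splits such a record into its tags; the record itself and the `parse_dmarc` helper are made up for illustration, and the sketch does no validation.

```python
def parse_dmarc(record: str) -> dict:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


# Hypothetical record, as it might be published at _dmarc.example.com
record = "v=DMARC1; p=quarantine; pct=100; rua=mailto:dmarc-reports@example.com"
policy = parse_dmarc(record)
print(policy["p"])    # requested handling for failing mail
print(policy["rua"])  # where aggregate reports should be sent
```

The `p` tag is the fixed rule the text refers to: it can only be `none`, `quarantine`, or `reject`, which is why extra analysis is layered on top.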

AI is all the more useful in detecting trickier, tactical threats such as lookalike domains, which DMARC alone might miss because it protects your own domain, not similar-looking ones registered by someone else. It scans millions of signals, from metadata to language tone, and compares them to what’s normal for your domain. A human team just can’t keep up with that level of detail and speed.
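As a rough sketch of lookalike-domain detection, the Python example below normalizes a few common character swaps and then measures string similarity with the standard library’s `difflib.SequenceMatcher`. The swap table, the 0.85 threshold, and the `is_lookalike` helper are assumptions for this example; real products use far richer signals.

```python
from difflib import SequenceMatcher

# A few character swaps seen in lookalike domains (illustrative, not exhaustive).
HOMOGLYPHS = {"0": "o", "1": "l", "3": "e", "5": "s", "rn": "m", "vv": "w"}


def normalize(domain: str) -> str:
    """Lowercase the domain and undo the swaps listed above."""
    domain = domain.lower()
    for fake, real in HOMOGLYPHS.items():
        domain = domain.replace(fake, real)
    return domain


def is_lookalike(candidate: str, trusted: str, threshold: float = 0.85) -> bool:
    """True when candidate is not the trusted domain but is suspiciously close to it."""
    if candidate.lower() == trusted.lower():
        return False
    similarity = SequenceMatcher(None, normalize(candidate), normalize(trusted)).ratio()
    return similarity >= threshold


for sender_domain in ("example.com", "examp1e.com", "exarnple.com", "unrelated.org"):
    print(sender_domain, is_lookalike(sender_domain, "example.com"))
```

Both sides are normalized the same way so that a trusted domain containing, say, `rn` is not penalized for its own spelling.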

AI also helps evaluate complicated DMARC reports by converting them from XML to simple English. It highlights misaligned senders, suggests policy updates, and even predicts which email sources might cause issues when you move to a stricter DMARC setting like p=reject.
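The XML-to-plain-English step does not need AI to get started. The Python sketch below reads a trimmed-down aggregate report (the element names follow the standard DMARC aggregate report layout, but the sample data and the `summarize` helper are invented for illustration) and prints one sentence per sending source.

```python
import xml.etree.ElementTree as ET

SAMPLE_REPORT = """<feedback>
  <report_metadata><org_name>receiver.example</org_name></report_metadata>
  <policy_published><domain>example.com</domain><p>none</p></policy_published>
  <record>
    <row>
      <source_ip>192.0.2.10</source_ip><count>120</count>
      <policy_evaluated><disposition>none</disposition><dkim>pass</dkim><spf>pass</spf></policy_evaluated>
    </row>
  </record>
  <record>
    <row>
      <source_ip>198.51.100.7</source_ip><count>15</count>
      <policy_evaluated><disposition>none</disposition><dkim>fail</dkim><spf>fail</spf></policy_evaluated>
    </row>
  </record>
</feedback>"""


def summarize(report_xml: str) -> list:
    """Turn a DMARC aggregate report into short plain-English lines."""
    root = ET.fromstring(report_xml)
    domain = root.findtext("policy_published/domain")
    policy = root.findtext("policy_published/p")
    lines = [f"Domain {domain} publishes p={policy}."]
    for row in root.iter("row"):
        ip = row.findtext("source_ip")
        count = int(row.findtext("count"))
        dkim = row.findtext("policy_evaluated/dkim")
        spf = row.findtext("policy_evaluated/spf")
        # DMARC passes when at least one of aligned DKIM or aligned SPF passes.
        if dkim == "pass" or spf == "pass":
            lines.append(f"{ip}: {count} messages passed DMARC.")
        else:
            lines.append(f"{ip}: {count} messages failed DMARC and would be rejected under p=reject.")
    return lines


print("\n".join(summarize(SAMPLE_REPORT)))
```

Where AI adds value is on top of this: deciding whether a failing source is a forgotten legitimate sender or an impersonator, and predicting the impact of tightening the policy.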

The dark side: How cybercriminals exploit AI

As many as 87% of organizations were affected by AI-driven cyberattacks in the past year. Attackers use AI at every stage: spotting vulnerabilities, launching campaigns using suitable attack vectors, penetrating deeper into the attack surface, establishing backdoors within systems, stealing or intercepting data, and tampering with system operations.

Since AI-powered cyberattacks can learn and evolve over time, this dark side of AI is expected to get only darker in the coming years. Here’s how AI is being exploited currently for attempting highly sophisticated cyberattacks:

AI-generated phishing emails

The use of AI to create convincing and flawless phishing emails has become the new normal. These emails are so well written that even some tools fail to distinguish them from legitimate communications, jeopardizing safety at multiple levels.

In fact, a controlled test conducted on 1,600 employees in 2023 found that ChatGPT-crafted phishing emails achieved an 11% click rate, closely rivaling the 14% click rate of human-written emails. And let’s not forget that this experiment was done in 2023, when ChatGPT was still in its nascent stage. Over the last two years, gen-AI platforms have improved significantly, which means that today these tools can create even more convincing and flawless emails.

Moreover, threat actors are now setting up fake websites that offer services like resume writing, graphic design, or task automation. These platforms appear to make daily tasks easier, but attackers misuse the information people share with them to carry out bigger cyberattacks.

Automating reconnaissance and target selection

Threat actors are now using AI tools to automate the early stages of cyberattacks, especially reconnaissance and target selection. Instead of manually scanning websites, social media, or employee directories, AI can gather, filter, and analyze huge amounts of publicly available data in minutes. This helps attackers choose the most vulnerable or valuable targets with minimal effort.

Here’s how they use it:

- Automated data mining: AI scrapes LinkedIn, GitHub, and company websites to collect employee names, roles, and email formats.

- Vulnerability scanning: Tools powered by AI scan thousands of IPs and domains to find open ports, outdated software, or weak configurations.

- Target prioritization: AI ranks targets based on job roles, access levels, or exposed data, helping attackers focus on those most likely to fall for phishing or have access to critical systems.


Deepfakes and voice cloning for social engineering

Cybercriminals are now using AI to create scarily real voice clones and deepfake videos to trick people. With just a few seconds of someone’s voice, like a CEO or manager, they can clone it using AI and make fake phone calls that sound completely real.

The same thing is happening with deepfake videos. Attackers are creating fake video calls where it looks like a real executive or team member is speaking, but it’s actually a computer-generated face and voice. In early 2024, a company in Hong Kong was tricked into transferring $25 million after an employee joined a video call with what seemed like their senior leadership—all of them turned out to be deepfakes.

These scams work because they feel real. The voice sounds familiar, the face looks right, and the message feels urgent. That’s what makes AI-powered social engineering so dangerous—it removes the usual red flags and makes people act before they have time to think.

AI for password cracking and brute force attacks

Now, there is a whole new category of AI-backed tools that malicious actors are using to crack passwords faster and more cleverly than ever before. Tools like PassGAN are trained on real leaked passwords, which makes them quite accurate in guessing new passwords that look very similar to the way real people create them.

The older tools were mostly based on fixed rules or wordlists, but the new-age AI-backed password-guessing tools can figure out human-like password patterns on their own, which makes brute-force attacks much more effective.

Classic tools like Hashcat and John the Ripper are also being paired with AI-generated guesses, letting attackers test billions of combinations in seconds. These AI-assisted approaches can spot common tricks like replacing letters with symbols or mixing multiple words together.

With AI in the mix, it takes far less time to break into accounts—making strong, unique passwords and multi-factor authentication more important than ever.
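Defenders can turn the same insight around and reject passwords that follow these predictable patterns. The Python sketch below is a simplified illustration: the substitution table, the tiny `COMMON_WORDS` list, and the `is_guessable` helper are assumptions for this example, not a complete password policy.

```python
# Typical symbol-for-letter swaps that guessing tools already anticipate.
LEET = str.maketrans({
    "@": "a", "4": "a", "0": "o", "1": "l", "3": "e",
    "$": "s", "5": "s", "!": "i", "7": "t",
})

# Hypothetical, deliberately tiny wordlist; a real check would use a large one.
COMMON_WORDS = {"password", "welcome", "dragon", "summer", "letmein"}


def is_guessable(password: str) -> bool:
    """True if the password contains a common word once typical swaps are undone."""
    normalized = password.lower().translate(LEET)
    return any(word in normalized for word in COMMON_WORDS)


for candidate in ("P@ssw0rd1", "Summer2024!", "xk7#Qm2&vLp9"):
    print(candidate, is_guessable(candidate))
```

A check like this is most useful at password-creation time, alongside multi-factor authentication, so that human-style patterns never reach the password database in the first place.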

Malware evasion with AI

With the integration of AI, malware is getting smarter and harder to detect. Threat actors are no longer relying on static, hardcoded malware. They can now build AI-enhanced malware that learns, adapts, and even evades traditional security tools in real time. Such malware can mimic genuine processes and even delay its execution if it senses that the environment isn’t safe enough. All of this poses concrete challenges for defenders.

The basic techniques behind such malware are:

- Polymorphic malware creation: AI helps generate malware that rewrites its own code on the fly, making each version look different while keeping the core malicious behavior intact—evading signature-based detection tools.

- Adversarial AI techniques: Attackers feed misleading inputs to machine learning models used by antivirus or EDR systems, tricking them into classifying malware as safe software.

- Behavioral masking: Malware uses AI to monitor the environment and adjust its actions to avoid raising any behavioral red flags that might alert advanced detection systems.

In fact, last year, threat actors used GenAI to write malicious code to spread AsyncRAT, an easily accessible remote access trojan that can be used to control a victim’s computer.

Data poisoning and model inversion attacks

Data poisoning and model inversion are not really the highlights of the cybercrime world, as they aren’t loud and obvious types of attacks. They usually happen in the background, but end up compromising the integrity and privacy of systems, businesses, and individuals in serious ways.

Data poisoning targets the training data a model relies on when responding to a user’s request. One data poisoning approach involves injecting malware into the targeted system so that it can be corrupted. In an incident reported in February 2024, researchers uncovered almost 100 machine learning models on the Hugging Face AI platform that secretly contained malicious payloads, which attackers had managed to upload to the repository.
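The text does not say how those payloads were embedded. Purely as an illustration, the Python sketch below assumes a model file stored as a Python pickle, a format that can run code when loaded, and inspects its opcodes with the standard library’s `pickletools` without ever unpickling it. The `risky_opcodes` helper and the `Payload` class are hypothetical.

```python
import pickle
import pickletools

# Opcodes that import objects or call them while a pickle is being loaded.
RISKY_OPCODES = {"GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX"}


def risky_opcodes(blob: bytes) -> set:
    """Inspect pickle opcodes without unpickling the data."""
    return {op.name for op, _arg, _pos in pickletools.genops(blob)} & RISKY_OPCODES


class Payload:
    """Stand-in for a malicious object; the callable used here is harmless."""

    def __reduce__(self):
        return (print, ("code would run at load time",))


safe_blob = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
unsafe_blob = pickle.dumps(Payload())  # serializing does not execute the callable

print(risky_opcodes(safe_blob))
print(sorted(risky_opcodes(unsafe_blob)))
```

Legitimate model files also use some of these opcodes to rebuild their objects, so a practical scanner would compare the imported names against an allowlist instead of flagging every occurrence.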

Model inversion flips the game entirely. Instead of messing with how an AI model learns, attackers try to work backwards and figure out what kind of data the model was trained on. This could mean recreating faces, personal health details, or other private information just by studying how the model responds to certain inputs.

Since these AI models are widely available through cloud platforms like OpenAI or Google Vertex AI, it’s getting easier for attackers to try this, especially when the models are open to the public through APIs.

Sector-wise impact of AI-powered cyber threats

AI-based cyber threats have penetrated deep into every industry. Here are the most targeted sectors:

Healthcare: AI-driven ransomware targeting patient records

In 2024, the healthcare sector faced unprecedented cyber threats. A staggering 67% of healthcare organizations reported ransomware attacks, a significant increase from 60% in 2023. These attacks led to an average recovery cost of $2.57 million per incident.

Gen-AI is one of the main driving forces behind these attacks. AI has amplified the capabilities of ransomware, enabling attackers to craft more sophisticated and targeted campaigns. By analyzing vast datasets, AI can identify vulnerabilities in healthcare systems, making it easier for cybercriminals to infiltrate networks and encrypt critical patient data.

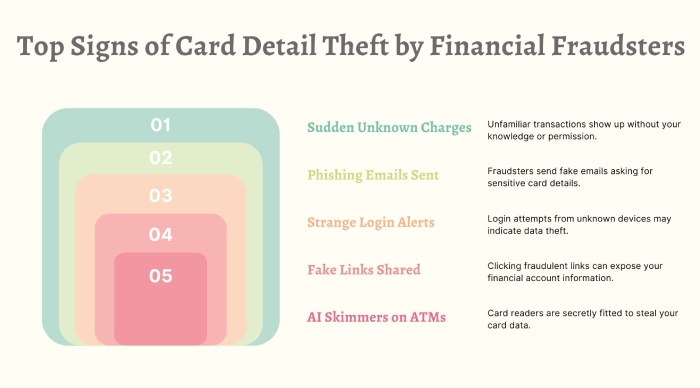

Finance: Deepfake CEO fraud and AI in ATM skimming

The finance sector has never been entirely safe, but the advent of AI has exposed each of its systems to new threats. In 2024, over 50% of businesses in the U.S. and U.K. were targeted by financial scams using deepfake technology, with 43% falling victim to these attacks. The deepfake videos mimic C-level executives to manipulate junior employees into sharing login credentials, wiring money, or revealing sensitive information.

ATM skimming, a kind of financial fraud where bad actors steal card details when you use an ATM, has also become smarter with AI. Even though the number of compromised debit cards dropped by 24% in 2024, the tools used by scammers have gotten more advanced. AI-powered skimmers can now adjust to different ATM models and use machine learning to avoid being spotted by regular security systems.

Manufacturing and industrial control systems: AI targeting IoT and SCADA systems

In 2024, nearly 26% of all cyberattacks targeted the manufacturing industry, making it one of the biggest hotspots for hackers. Since the industry depends so much on connected systems and data, it’s become a prime target for cybercriminals looking to cause disruption or steal valuable information.

Also, modern manufacturing operations heavily rely on IoT devices and Supervisory Control and Data Acquisition (SCADA) systems to automate production, monitor machinery, and control operations. These systems lack strong in-built defense systems and are rarely patched. This is where attackers leverage AI; they analyze the complex environments, spot exploitable vulnerabilities, and launch precision attacks that can shut down the entire chain of operations.

Moreover, this industry has a low tolerance for downtime: even short outages can result in losses of millions. Threat actors use AI to study network behavior and launch ransomware attacks at the most vulnerable or high-stakes moments. Since manufacturers are more likely to pay ransoms to avoid costly downtime, this makes them a profitable target.

Education and research: Credential theft and intellectual property attacks using AI

Education and research institutes are increasingly targeted by AI-driven attacks because they run on legacy systems and open-access networks, and store a vast amount of sensitive data.

In June 2024, a major breach exposed the personal information of over 46,000 university students, including names, email addresses, and student IDs—data that can easily be used for identity theft, phishing campaigns, or account takeovers.

AI tools make these attacks even more dangerous by automating credential harvesting at scale, analyzing login behavior, and cracking weak or reused passwords across student and faculty accounts.

Government and critical infrastructure: Nation-state AI-driven cyber espionage

Nation-state attackers are now using AI to go after government systems and critical infrastructure, and the stakes couldn’t be higher. These systems don’t just store sensitive data like citizen records or intelligence files—they also control essentials like electricity, water, and communications. Disrupting any of these can cause chaos and even threaten national stability.

AI makes these attacks smarter and faster. Instead of manually scanning for weaknesses, attackers can use AI tools to automate the process, find vulnerabilities quicker, and launch malware that adapts based on its surroundings. This means attacks are not only harder to detect, but they can also evolve in real time.

A real example of this happened in 2024, when Russian cyber spies used AI-assisted tools to hack Mongolia’s Ministry of Foreign Affairs. They stole browser cookies to access sensitive session data without needing passwords.

These aren’t just ordinary hacks anymore—they’re carefully planned, AI-driven operations that can have serious national and global consequences.

AI and the rise of autonomous cyberattacks

Autonomous cyberattacks are those directed by AI algorithms on their own. These attacks can inflict serious monetary and reputational damage, primarily because they operate without requiring a human to command them. This makes autonomous cyberattacks faster, more adaptive, and harder to trace.

With this technology, threat actors can now perform the following tasks, which earlier required highly skilled people:

- Automated reconnaissance: Scanning networks to identify potential targets and vulnerabilities.

- Adaptive exploitation: Adjusting attack strategies in real-time based on the target’s defenses.

- Self-replication: Spreading across networks without human guidance.

Morris II: the infamous autonomous malware

Morris II is an apt example of self-replicating malware designed to exploit Gen-AI systems. It targets AI-powered email assistants using self-replicating prompts that cause the model to reproduce its input as output. This lets the malware spread autonomously across interconnected AI systems without requiring any human to step in and oversee the process.

Once inside a system, Morris II can perform the following malicious tasks:

- Data exfiltration: It can extract sensitive personal data from infected systems’ emails, including names, phone numbers, credit card details, and social security numbers.

- Spam propagation: The worm can generate and send spam or malicious emails through compromised AI-powered email assistants, facilitating its spread to other systems.

Researchers tested Morris II against three different GenAI models: Gemini Pro, ChatGPT 4.0, and LLaVA. The tests evaluated the worm’s propagation rate, replication behavior, and overall malicious activity, and they illustrate how dangerous such a creation could be.

Ethical and regulatory blind spots: who is responsible when AI is misused

One of the biggest questions in AI and cybersecurity is: who’s to blame when something goes wrong? If an AI tool is used to write a phishing email, spread malware, or clone a CEO’s voice, it’s not always clear who’s at fault. Is it the developer who built the model? Is it the company using it? Or the hacker who misused it?

Right now, there are no clear global rules around this. So when AI is involved in a cyberattack, it’s hard for victims to hold anyone accountable. This gap in responsibility makes it easier for bad actors to get away with misuse and harder for organizations to protect themselves.

No global rules

AI is advancing fast, but regulation isn’t keeping up. Most countries don’t have strong laws around how AI can or can’t be used in cyberattacks. A few regions, like the EU and U.S., have started working on AI safety rules, but there’s no global standard. This makes it easy for attackers to operate across borders and hide in legal grey zones.

Moreover, open-source AI models (like public code or language tools) are easily available to everyone on the internet. While these tools are valuable for learning and innovation, adversaries use them maliciously. This has possibly created many threat actors who never intended to go in this direction, but whose curiosity about misusing these tools steered them down the wrong path.

Challenges for cybersecurity teams

Until a few years ago, AI wasn’t considered harmful; in fact, it was seen as a technology for the next generation. But AI has now penetrated so deeply into the cybercrime world, for both attackers and defenders, that CISOs are finding it hard to cope with the following challenges:

Skills gap in AI and ML

Cybercriminals are moving fast with AI—automating phishing, creating shapeshifting malware, and even launching self-spreading attacks. But defenders are struggling to keep up. Most cybersecurity pros know networks and threats well, but many lack training in AI, data science, or how machine learning models can be misused. This makes it tough to spot or stop AI-generated attacks.

The problem gets worse with the shortage of skilled talent. Many teams don’t have people who can build or manage AI-based defense tools. In fact, a 2024 ISC² report found that over 60% of cybersecurity teams lack the AI/ML skills needed for advanced threat detection. This talent gap is slowing down how fast companies can respond to smarter, AI-driven threats.

Alert fatigue due to automated false positives

Modern security tools use AI to catch threats quickly, but they often trigger way too many alerts, most of which are false alarms. When the system isn’t well-tuned, even harmless activity gets flagged, leaving teams buried under hundreds of notifications every day.

This constant noise is exhausting for security analysts. They have to sort through all the clutter just to spot the few real threats. And when AI-powered attacks come in—fast, sneaky, and smart—there’s a real risk that the important alerts get lost or ignored.

In the long run, this kind of alert fatigue leads to burnout, slower reactions, and even missed breaches, and this is exactly what attackers count on.
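A little arithmetic shows why false alarms pile up even when a detector looks accurate on paper. The Python sketch below uses made-up numbers (one million events a day, 1 in 10,000 malicious, 99% detection, 1% false positives); the `alert_precision` helper is hypothetical.

```python
def alert_precision(daily_events, attack_rate, detection_rate, false_positive_rate):
    """Return (true alerts, false alerts, share of alerts that are real)."""
    attacks = daily_events * attack_rate
    benign = daily_events - attacks
    true_alerts = attacks * detection_rate
    false_alerts = benign * false_positive_rate
    total = true_alerts + false_alerts
    precision = true_alerts / total if total else 0.0
    return true_alerts, false_alerts, precision


# All four inputs are illustrative assumptions, not measured figures.
true_alerts, false_alerts, precision = alert_precision(
    daily_events=1_000_000,
    attack_rate=0.0001,
    detection_rate=0.99,
    false_positive_rate=0.01,
)
print(f"real alerts: {true_alerts:.0f}")
print(f"false alarms: {false_alerts:.0f}")
print(f"share of alerts that are real: {precision:.1%}")
```

With these inputs, roughly one alert in a hundred is real, because benign events vastly outnumber attacks. That is why tuning the false-positive rate matters more to analysts than squeezing out the last point of detection rate.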

Integration complexity in legacy systems

Legacy infrastructure, often built decades ago, was never designed to handle today’s level of sophistication in cyber threats, especially those powered by AI. These older systems typically lack modularity, API compatibility, and real-time monitoring capabilities, making it incredibly difficult to plug in modern AI-based security tools without overhauling entire environments.

Even when integration is possible, it’s often partial, patchy, or riddled with compatibility issues that reduce the effectiveness of threat detection.

This becomes a serious issue when facing AI-driven attacks that adapt, learn, and evolve in real-time. For instance, AI-powered malware can test different entry points or camouflage itself based on system behavior—something legacy systems can’t recognize or respond to quickly.

Prevention and defenses in the era of AI

Defending systems in the era of AI requires a shift from traditional, reactive security to a more proactive, intelligent approach. Organizations must invest in AI-powered cybersecurity tools that can detect threats in real time, learn from evolving attack patterns, and respond automatically to reduce damage.

Equally important is building a layered defense strategy that includes multi-factor authentication, zero trust architecture, endpoint protection, and continuous monitoring. Human oversight still matters—so regular employee training, red-teaming exercises, and threat simulations are key to staying sharp.

Businesses should also secure their AI systems themselves, ensuring training data isn’t poisoned and models aren’t exposed through unsecured APIs. Ultimately, prevention in the AI era isn’t about one tool—it’s about creating a flexible, adaptive security ecosystem that can keep pace with intelligent threats.