How to spot and dodge AI impersonation attacks

AI is everywhere, from smartphones and home appliances to automated systems in workplaces and industry. We are firmly in the era of artificial intelligence, and AI-driven tools now reach almost every domain, including cybersecurity.

AI brings real benefits to cybersecurity, but we cannot overlook how attackers are using the same technology to impersonate people and launch serious attacks.

You might have heard of deepfake videos, synthetic voices, and phishing chatbots; all of these are made possible by AI. Impersonation tactics like these used to be less widespread and less sophisticated, mainly because they were complex and resource-intensive to pull off. With AI, attackers can now impersonate almost anyone, from celebrities to ordinary people.

Because almost anyone can now be targeted, it is crucial to understand these attacks and learn how to spot them so that you do not fall victim to them.

How do cybercriminals leverage AI to carry out impersonation attacks?

Cybercriminals use AI to dupe unsuspecting targets into believing they are interacting with people they trust, making impersonation attacks more persuasive and much harder to detect. They create deepfake videos and voice clones that look and sound like someone you know, such as a colleague, a client, or even a family member, to trick you into revealing confidential information or sending money.

Phishing chatbots are another common technique: impersonators use them to pose as customer service representatives who chat with you in real time and try to collect your passwords or banking details.

Attackers also send emails that appear to come from someone you know and trust, which makes the message seem even more credible. On social media, they create fake profiles that resemble your friends or co-workers and build your trust before making a request. They also use AI to gather information about you online, so they know details that make the impersonation more believable.

How do you identify AI-powered impersonation attacks?

In May 2024, the FBI issued a warning about the growing threat of AI-powered attacks. It might read like just another warning, but the fact that it came from the FBI says a lot about how serious the problem has become.

Since we can’t stop technological advancements or control how they’re used, we need to be fully prepared to tackle the challenges they bring.

The good news is that recognizing AI impersonation attacks is not as hard as you might think. However well crafted an attack is, it usually has a flaw or a subtle clue that gives it away.

Here are the key warning signs to watch for when identifying and blocking AI-driven impersonation threats:

Steer clear of unusual requests

If someone suddenly asks you to do something unusual, take it with a pinch of salt. The request could be anything: transferring money, sharing your personal details, or granting access to sensitive information. When such requests seem to come out of nowhere, there is a chance the sender is not actually the person you think it is. Attackers may be using generative AI tools to craft a message that pushes you to respond without giving it a second thought.

Look for contextual anomalies

Unlike messages written by people, AI-generated ones often lack a personal touch and nuance. A fraudulent message may be missing the personal context or small details that someone you know would usually include. The language might sound too formal or slightly awkward, or the message might lack the specific references you would expect. Often, something about it will simply feel off.

Beware of messages that emphasize confidentiality

If a message keeps stressing how ‘confidential’ or ‘private’ it is, pause. Scammers often use such words to make a request seem so sensitive and urgent that you feel you cannot discuss it with anyone. This exploits the basic human tendency to act hastily under pressure, and it also stops you from checking with someone who might recognize the scam.

Look for poor audio-video synchronization

Audio-visual asynchrony is one of the clearest signs of an AI-generated or manipulated video. For instance, if the speaker’s lip movements do not match the audio, the clip may well be a deepfake or an edited video. Many AI impersonation clips show poor A/V sync, especially during fast movements or complex facial expressions, so these discrepancies are often easy to spot.
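As a rough illustration of how A/V drift can be measured rather than eyeballed, the sketch below estimates the offset between two per-frame signals: a mouth-openness score (assumed to come from some face-landmark tool) and the audio loudness for the same frames. It slides one signal against the other and keeps the lag with the highest Pearson correlation. The function names and inputs are hypothetical, and real deepfake detectors rely on far richer features, so treat this as a toy model of the idea.

```python
from math import sqrt
from statistics import fmean
from typing import Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def estimate_av_lag(mouth: Sequence[float], audio: Sequence[float],
                    max_lag: int) -> Tuple[int, float]:
    """Hypothetical helper: return (lag_in_frames, correlation) of the best alignment.

    Positive lag: the audio trails the video by `lag` frames.
    Negative lag: the audio runs ahead of the video.
    """
    best_lag, best_r = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            # Compare mouth[t] with audio[t + lag]
            v, a = mouth[: max(0, len(mouth) - lag)], audio[lag:]
        else:
            # Compare mouth[t - lag] with audio[t]
            v, a = mouth[-lag:], audio[: max(0, len(audio) + lag)]
        n = min(len(v), len(a))
        if n < 2:
            continue  # too little overlap to correlate
        r = pearson(v[:n], a[:n])
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r
```

At 25 frames per second, a detected lag of 3 frames corresponds to 120 ms. A genuine recording should peak near zero lag with a strong correlation; a large offset or a weak peak is one hint, not proof, that the clip deserves a closer look.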

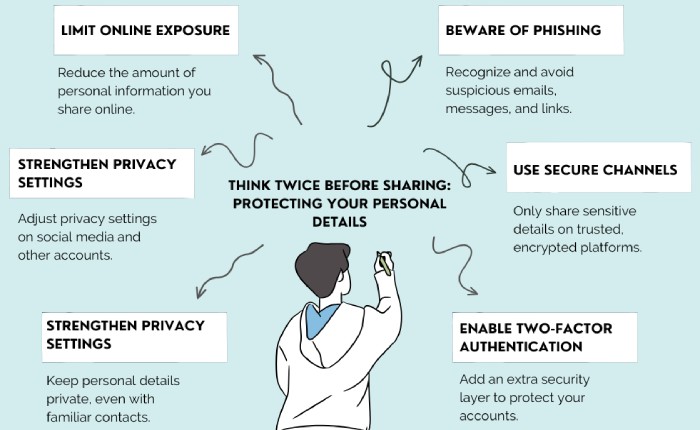

How do you protect yourself from impersonation threats?

Now that you know what AI-powered impersonation attacks look like, let’s move on to the strategies you can use to defend yourself against them. Knowing what these attacks look like is not enough on its own; you also have to actively build robust defenses.

Develop an incident response plan

While no one can be completely immune to AI-driven attacks, what matters most is how quickly you recover after being hit by one. A well-thought-out incident response plan goes a long way toward limiting damage and getting back to business. When developing the plan, focus on creating a framework for detecting, investigating, and recovering from attacks. Test and update it regularly to keep pace with ever-evolving threats.

Deploy a multi-layered approach to security

Sophisticated AI-driven attacks call for equally sophisticated defenses. A password alone is no longer enough to protect your email, accounts, and sensitive information. A good starting point is multi-factor authentication (MFA), which requires two or more independent types of verification, such as a password plus a one-time code from an authenticator app. This makes it difficult for impersonators to get into your account even if they have your password.
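Many authenticator apps implement that second factor with time-based one-time passwords (TOTP, RFC 6238). The sketch below shows the idea using only Python’s standard library: the server and the app share a secret, and each derives the same short code from the current 30-second time step, so a stolen password alone is not enough. The function names are illustrative, and a production system would also need secure secret storage, rate limiting, and protection against reusing a code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # HMAC over the counter encoded as an 8-byte big-endian integer
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose a 4-byte window
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30,
         digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP with counter = Unix time // step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept codes from the current step and +/- `window` steps to allow for clock drift."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        c = counter + drift
        if c < 0:
            continue
        # Constant-time comparison avoids leaking how many characters matched
        if hmac.compare_digest(hotp(secret, c, digits), submitted):
            return True
    return False
```

With the RFC 6238 test secret `12345678901234567890`, `totp(b"12345678901234567890", at=59, digits=8)` returns `94287082`, matching the RFC’s published test vector.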

To protect your email communications, use the email authentication protocols SPF, DKIM, and DMARC. SPF lists the servers allowed to send mail for your domain, DKIM adds a cryptographic signature to outgoing messages, and DMARC tells receiving servers what to do with mail that fails these checks. Together, they help confirm that emails claiming to be from your domain are actually genuine. Talk to us to get started with SPF, DKIM, and DMARC.
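A DMARC policy is published as a DNS TXT record at `_dmarc.<your-domain>`, which you can retrieve with, for example, `dig TXT _dmarc.example.com`. The sketch below parses such a record string, assuming you have already fetched it. It checks only a few basics from RFC 7489 (`v=DMARC1` present and first, a valid `p=` policy) and is not a full validator; the function names are hypothetical.

```python
from typing import Dict, Tuple

VALID_POLICIES = {"none", "quarantine", "reject"}


def parse_dmarc(record: str) -> Dict[str, str]:
    """Split a DMARC TXT record into {tag: value}, checking a few RFC 7489 basics."""
    tags: Dict[str, str] = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing semicolon
        name, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed tag: {part!r}")
        tags[name.strip().lower()] = value.strip()
    # v=DMARC1 must be present, match exactly, and be the first tag
    if not tags or next(iter(tags)) != "v" or tags["v"] != "DMARC1":
        raise ValueError("record must start with v=DMARC1")
    if tags.get("p", "").lower() not in VALID_POLICIES:
        raise ValueError("missing or invalid p= policy")
    return tags


def summarize(tags: Dict[str, str]) -> Tuple[str, str, int]:
    """Return (domain policy, subdomain policy, percentage of failing mail it applies to)."""
    policy = tags["p"].lower()
    sub_policy = tags.get("sp", policy).lower()  # sp defaults to p
    pct = int(tags.get("pct", "100"))  # pct defaults to 100
    return policy, sub_policy, pct
```

A policy of `p=none` only monitors; `quarantine` or `reject` is what actually asks receivers to act on mail that fails authentication, which is where DMARC starts to block impersonators spoofing your domain.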